Large data tables are an essential feature for many websites, but their complexity can easily lead to sluggish performance. High data volumes, inefficient queries, and unoptimized front-end rendering often create bottlenecks that damage both user experience and site performance.

Picture this: a visitor lands on your website, eager to explore your data-rich content. Instead of a seamless experience, they face endless loading spinners or laggy table interactions. The result? Frustration, increased bounce rates, and missed opportunities to engage or convert users.

In this blog, we’ll explore proven techniques to tackle these challenges head-on. From optimizing your database queries to leveraging advanced front-end strategies, you’ll discover actionable solutions to ensure your large data tables load quickly, keeping your site fast, efficient, and user-friendly.

What are the Causes of Slow Loading Speeds?

Large data tables can be a nightmare for website performance when the root causes of slow loading go unaddressed. Let’s break down the key issues, why they occur, and how they impact your business:

High data volume

What causes it?

Large datasets with thousands (or even millions) of rows and columns often come from data-heavy operations like financial records, customer logs, or inventory databases. When all this data is loaded at once, it creates a significant strain on server resources, causing slower responses.

Impact on business:

A slow-loading table can frustrate users, especially those who need quick access to information. For example:

- E-commerce sites may lose customers who abandon their cart due to slow product filters.

- Data-driven dashboards might see reduced engagement as users opt for faster alternatives.

- Search engines penalize slow websites, leading to lower search rankings and reduced visibility.

Inefficient database queries

What causes it?

Poorly written SQL queries, missing indexes, or an overloaded database can dramatically slow data retrieval. If the database has to scan every row to return results (a “full table scan”), query time grows in step with the table, so a query that felt instant on a few thousand rows can become painfully slow on millions.

Impact on business:

- Delayed response times: A sluggish database can bring down the performance of your entire site, not just the table.

- Operational inefficiency: Teams relying on up-to-date data for decision-making may face delays, disrupting workflows.

- Higher hosting costs: Inefficient queries demand more server resources, leading to potential overages or the need for expensive upgrades.

Heavy front-end rendering

What causes it?

When a table attempts to render thousands of rows at once in the browser, it can overwhelm client-side resources. This is common in tables built without optimizations like lazy loading, virtual scrolling, or efficient libraries. Additional factors like bloated CSS and JavaScript files compound the problem.

Impact on business:

- Poor user experience: Users experience freezing, lag, or even browser crashes, which can erode trust in your site.

- Increased bounce rates: Visitors won’t wait for a slow page; they’ll leave and potentially head to competitors.

- Lost revenue opportunities: Whether it’s an analytics dashboard or a pricing comparison page, users leaving early means fewer conversions or sales.

Identifying which of these factors is at play on your site is the first step toward optimization. With tools like performance profilers, query analyzers, and front-end inspection tools, you can pinpoint the bottlenecks and apply targeted fixes.

Backend Optimization Techniques for Large Data Sets

Addressing backend inefficiencies is critical for ensuring large data tables load quickly. By optimizing how data is stored and retrieved, you can significantly reduce loading times and improve overall site performance. Here are the key techniques to focus on:

1. Indexing

What it is:

Indexing is the process of creating a data structure that allows the database to find rows faster, much like a book’s index helps you locate topics quickly.

How it helps:

- Speeds up query execution by reducing the amount of data the database scans.

- Essential for columns that are frequently used in filters, searches, or joins.

Example:

For a table tracking sales data, adding an index to the “order_date” column allows quick retrieval of records within a specific date range.

Implementation tips:

- Focus on columns used in WHERE clauses, ORDER BY, or JOIN statements.

- Avoid over-indexing, as excessive indexes can slow down insert or update operations.
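
To see the effect of an index on the “order_date” example, here is a minimal sketch using Python's built-in `sqlite3` module. The `sales` table, its columns, and the index name `idx_sales_order_date` are assumptions made for illustration; your own database and schema will differ, but most SQL databases offer a comparable way to inspect a query plan.

```python
import sqlite3

# In-memory database with a small, made-up sales table (illustrative only).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (id INTEGER PRIMARY KEY, order_date TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales (order_date, amount) VALUES (?, ?)",
    [(f"2023-{month:02d}-15", 10.0 * month) for month in range(1, 13)],
)

query = "SELECT id, amount FROM sales WHERE order_date BETWEEN ? AND ?"
params = ("2023-03-01", "2023-06-30")


def plan(sql, args):
    """Return SQLite's query-plan description as a single string."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, args).fetchall()
    return " | ".join(row[3] for row in rows)


plan_before = plan(query, params)  # without an index, SQLite scans the whole table
conn.execute("CREATE INDEX idx_sales_order_date ON sales (order_date)")
plan_after = plan(query, params)   # with the index, SQLite searches by order_date

print("before:", plan_before)
print("after: ", plan_after)
```

Comparing the two plans before and after adding an index is a quick way to confirm that the index is actually being used by the query you care about.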

2. Data partitioning

What it is:

Partitioning divides a large dataset into smaller, more manageable chunks based on criteria such as date, region, or category.

How it helps:

- Reduces the amount of data scanned during queries by targeting only relevant partitions.

- Improves performance for large, frequently queried tables.

Example:

A sales database can be partitioned by year, so querying “2023 sales” involves scanning only that year’s data rather than the entire dataset.

Implementation tips:

- Use partitioning for datasets with logical divisions (e.g., time series data).

- Ensure queries are written to leverage partitions effectively.
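
The idea behind partition pruning can be shown with a toy sketch in plain Python. This is not how a database implements partitioning (real databases handle it natively); the rows and the `total_sales` helper are hypothetical and only illustrate why a query for one year never needs to touch the other years' data.

```python
from collections import defaultdict

# Hypothetical rows: (order_date, amount)
rows = [
    ("2022-11-03", 40.0),
    ("2023-01-20", 25.0),
    ("2023-07-09", 60.0),
    ("2024-02-14", 15.0),
]

# Partition the rows by year, using the first four characters of the date.
partitions = defaultdict(list)
for order_date, amount in rows:
    partitions[order_date[:4]].append((order_date, amount))


def total_sales(year):
    """Sum one year's sales by reading only that year's partition."""
    return sum(amount for _, amount in partitions.get(year, []))


print(total_sales("2023"))  # only the two 2023 rows are read
```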

3. Caching

What it is:

Caching temporarily stores frequently accessed data so the database doesn’t need to reprocess the same queries repeatedly.

How it helps:

- Reduces database load by serving data from memory or a cache layer.

- Provides near-instant responses for repetitive queries.

Example:

A WordPress dashboard displaying weekly analytics can cache the results instead of running complex queries each time the page loads.

Implementation tips:

- Use an in-memory cache layer (e.g., Memcached, Redis) in front of the database.

- Implement query caching for recurring data requests.
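
As a rough illustration of query caching, the sketch below keeps results in a dictionary with a time-to-live. The `run_weekly_report` function stands in for an expensive query and is hypothetical, as are the key name and the 300-second lifetime; in production the dictionary would typically be replaced by a shared cache such as Redis or Memcached.

```python
import time

_cache = {}      # key -> (expires_at, value)
query_count = 0  # counts how often the "database" is actually hit


def run_weekly_report():
    """Stand-in for an expensive analytics query (hypothetical)."""
    global query_count
    query_count += 1
    return {"visits": 1200, "signups": 37}


def cached(key, ttl_seconds, compute):
    """Return the cached value for key, recomputing once it has expired."""
    now = time.monotonic()
    hit = _cache.get(key)
    if hit is not None and hit[0] > now:
        return hit[1]
    value = compute()
    _cache[key] = (now + ttl_seconds, value)
    return value


first = cached("weekly_report", 300, run_weekly_report)
second = cached("weekly_report", 300, run_weekly_report)
print(query_count)  # the second call was served from the cache
```

The time-to-live is the main design choice here: a longer value saves more queries, but users may see older data.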

4. Database optimization

What it is:

Optimizing how data is stored and queried ensures your database operates efficiently.

How it helps:

- Prevents slow queries and excessive resource usage.

- Improves scalability as your dataset grows.

Example:

Optimizing an e-commerce database might involve normalizing data to reduce redundancy or denormalizing when faster lookups are needed.

Implementation tips:

- Optimize SQL queries by avoiding SELECT *. Only retrieve the necessary columns.

- Choose efficient data types (e.g., using integers instead of strings for IDs).

- Regularly analyze query performance and adjust indexes or schema as needed.

- If you run Oracle, consider partnering with an Oracle Managed Service Provider (MSP) for ongoing database optimization, monitoring, and performance tuning, which is often more cost-effective than maintaining in-house Oracle expertise.

Optimize Data Transmission for Large Data Tables

Even with an optimized backend, how data is transmitted to the user’s browser plays a crucial role in performance. Large payloads, inefficient delivery methods, or lack of transmission optimizations can still bottleneck table loading. But don’t worry! We’ve prepared actionable tips to help you manage data transmission:

Pagination and lazy loading

- Pagination: Splits data into smaller chunks and loads one page at a time.

- Lazy loading: Dynamically loads more data as users scroll or interact with the table.

How it helps:

- Reduces initial load times by fetching only the rows currently visible.

- Minimizes server and browser memory usage.

Example:

A table with 100,000 rows can display 50 rows per page using pagination, significantly improving initial load time. Lazy loading can enhance this further by preloading the next set of rows as users scroll.

Best practices:

- Implement server-side pagination for seamless performance with large datasets.

- Combine pagination and lazy loading for better user experience.
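
Server-side pagination usually comes down to asking the database for one slice of rows at a time. Here is a minimal sketch with Python's `sqlite3` module; the `products` table and the `fetch_page` helper are made up for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany(
    "INSERT INTO products (name) VALUES (?)",
    [(f"Product {i}",) for i in range(1, 1001)],
)


def fetch_page(page, per_page=50):
    """Return the rows for one page plus the total number of pages."""
    total = conn.execute("SELECT COUNT(*) FROM products").fetchone()[0]
    offset = (page - 1) * per_page
    rows = conn.execute(
        "SELECT id, name FROM products ORDER BY id LIMIT ? OFFSET ?",
        (per_page, offset),
    ).fetchall()
    total_pages = -(-total // per_page)  # ceiling division
    return rows, total_pages


rows, total_pages = fetch_page(page=3)
print(len(rows), rows[0], total_pages)
```

One caveat: with `OFFSET`, the database still has to walk past all the skipped rows, so very deep pages get slower. For those cases, filtering on the last seen key (for example `WHERE id > ?`) is a common alternative.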

Compression

Compression reduces the size of data sent from the server to the client by encoding it more efficiently.

How it helps:

- Decreases payload size, resulting in faster data transfer.

- Especially effective for repetitive data, like table rows.

Example:

Using Gzip compression on a JSON dataset can often reduce its size by 70% or more, because table rows repeat the same keys and similar values. The smaller payload reaches the browser faster.

Best practices:

- Enable Gzip or Brotli compression on your web server.

- Optimize data formats (e.g., use JSON instead of XML for lighter payloads).
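
You can measure the gain on your own data with a few lines of Python. In this sketch the product rows are invented, and the compression happens in application code purely for demonstration; on a real site the web server normally compresses responses for you once Gzip or Brotli is enabled.

```python
import gzip
import json

# Made-up table rows with the kind of repetition typical of tabular JSON.
rows = [
    {"id": i, "name": f"Product {i}", "status": "in_stock", "price": 19.99}
    for i in range(1000)
]

payload = json.dumps(rows).encode("utf-8")
compressed = gzip.compress(payload)
ratio = len(compressed) / len(payload)

print(f"{len(payload)} bytes -> {len(compressed)} bytes ({ratio:.0%} of original)")

# The browser decompresses transparently; here we do it by hand to verify.
restored = json.loads(gzip.decompress(compressed))
```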

Efficient APIs

Efficient APIs deliver only the data needed for the current table view, rather than the entire dataset.

How it helps:

- Reduces unnecessary data transfer, speeding up loading times.

- Makes real-time filtering and sorting more efficient.

Example:

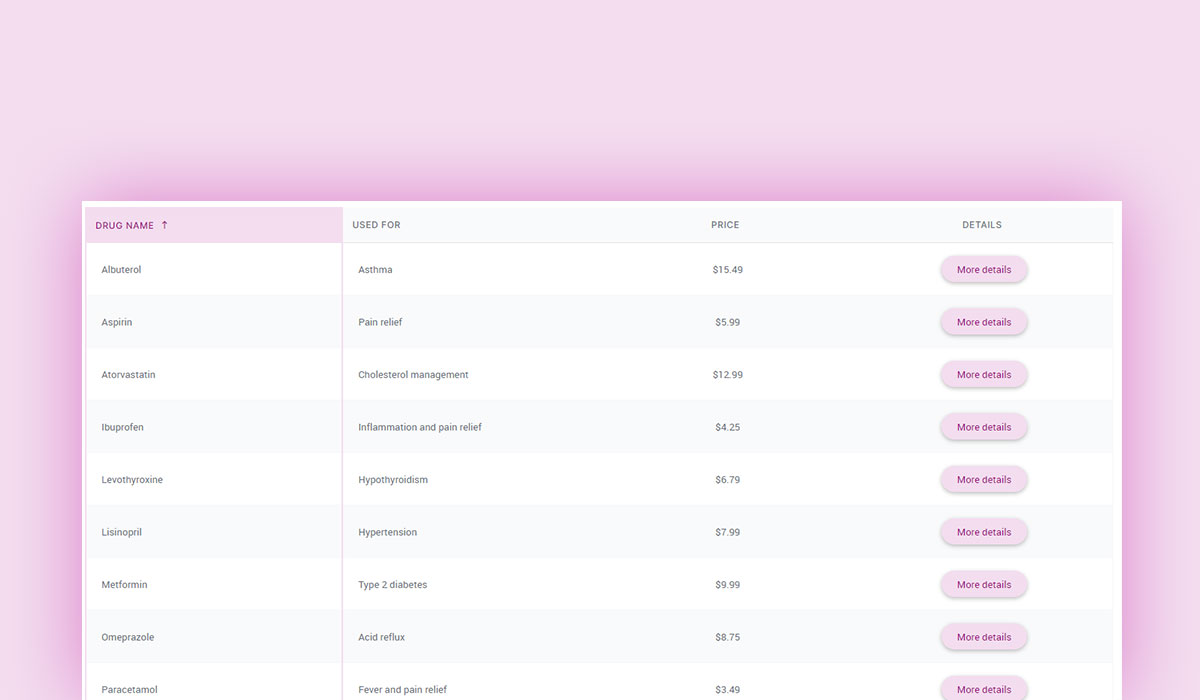

Instead of sending the entire dataset for a product inventory, an API could return only 20 items for the current page with relevant fields (e.g., product name, price, stock status).

Best practices:

- Use parameterized queries in APIs to retrieve filtered or paginated results.

- Ensure APIs return minimal metadata to keep responses lightweight.
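
The sketch below shows what such an endpoint's data layer might look like. The `inventory` table, its columns, and the `list_inventory` function are assumptions for illustration, not part of any particular framework. It selects only the fields the table displays, applies the filter through a parameterized query, and returns a single page.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.row_factory = sqlite3.Row  # lets each row be converted to a dict
conn.execute(
    "CREATE TABLE inventory (id INTEGER PRIMARY KEY, name TEXT, price REAL, "
    "in_stock INTEGER, description TEXT, supplier_notes TEXT)"
)
conn.executemany(
    "INSERT INTO inventory (name, price, in_stock, description, supplier_notes) "
    "VALUES (?, ?, ?, ?, ?)",
    [(f"Item {i}", 5.0 + i, i % 2, "long text " * 20, "internal") for i in range(1, 101)],
)


def list_inventory(in_stock_only, page, per_page=20):
    """Build a JSON-ready response containing one page of the visible fields."""
    sql = "SELECT name, price, in_stock FROM inventory"  # no SELECT *
    args = []
    if in_stock_only:
        sql += " WHERE in_stock = ?"  # value is bound, never concatenated
        args.append(1)
    sql += " ORDER BY id LIMIT ? OFFSET ?"
    args += [per_page, (page - 1) * per_page]
    items = [dict(row) for row in conn.execute(sql, args)]
    return {"page": page, "items": items}


response = list_inventory(in_stock_only=True, page=1)
print(len(response["items"]), response["items"][0])
```

Bulky columns such as `description` and `supplier_notes` never leave the server, which keeps the response small.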

Ensure Large Data Tables Load Quickly with Front-End Optimization Strategies

While backend optimizations are crucial, the user-facing experience also plays a significant role in table performance. Optimizing the way data is rendered and interacted with on the front end can make a huge difference. That’s why we’ve prepared separate strategies for front-end optimization to ensure your large data tables load smoothly:

Virtual scrolling

Virtual scrolling involves rendering only the rows that are visible within the user’s viewport, rather than rendering all rows at once. As the user scrolls down, more rows are dynamically loaded.

How it helps:

- Reduces memory usage by not rendering the entire table at once.

- Improves rendering time, especially for extremely large datasets (thousands or millions of rows).

Example:

A table displaying a large number of customer records can render only the visible rows on the screen, while additional rows load as the user scrolls down. This keeps the page responsive and fast, no matter how many rows the table has.

Best practices:

- Use a grid library such as ag-Grid, which virtualizes rows out of the box, or pair a headless library like React Table with a separate virtualization library (for example, react-window).

- Ensure that data is loaded in manageable chunks (e.g., 100 rows per batch) to avoid overloading the browser.
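
At its core, virtual scrolling is a small calculation: given the scroll position, work out which rows fall inside the viewport. The sketch below shows that arithmetic in Python for readability (in a browser it would run in JavaScript). The function name, the fixed row height, and the `overscan` buffer of extra rows are assumptions made for the example.

```python
def visible_range(scroll_top, viewport_height, row_height, total_rows, overscan=5):
    """Return (start, end) row indices to render; end is exclusive."""
    first = scroll_top // row_height             # first row touching the viewport
    visible = -(-viewport_height // row_height)  # rows that fit, rounded up
    start = max(0, first - overscan)
    end = min(total_rows, first + visible + overscan)
    return start, end


start, end = visible_range(
    scroll_top=12_000, viewport_height=600, row_height=30, total_rows=100_000
)
print(start, end)  # 395 425
```

Out of 100,000 rows, only 30 are rendered at this scroll position. The few overscan rows above and below the viewport help avoid blank gaps during fast scrolling.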

Client-side caching

Caching stores a copy of data on the user’s device or browser, reducing the need to make repeated requests for the same data.

How it helps:

- Speeds up table interactions by loading data from local storage instead of the server.

- Minimizes unnecessary API calls and reduces server load.

Example:

If a user filters a product table by category, the data for that category can be cached on the client side. The next time they visit the page, the data is loaded almost instantly, without needing to query the server.

Best practices:

- Use browser storage (localStorage, sessionStorage) for lightweight caching of table data.

- Cache API responses and use versioning for cache invalidation to ensure fresh data.
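
The versioning idea can be sketched as follows. Python is used here only to show the logic: in a browser, the `storage` dictionary would be `localStorage` or `sessionStorage`, and `fetch_from_api` would be a real request. All names, including the `CACHE_VERSION` value, are hypothetical.

```python
import json

storage = {}          # stand-in for browser storage: string keys, string values
CACHE_VERSION = "v2"  # bump this when the data's shape or source changes


def cache_key(category):
    """Include the version in the key so old entries are simply never read."""
    return f"table:{CACHE_VERSION}:{category}"


def load_category(category, fetch):
    """Return rows from the cache, calling fetch(category) only on a miss."""
    key = cache_key(category)
    if key in storage:
        return json.loads(storage[key])
    rows = fetch(category)
    storage[key] = json.dumps(rows)
    return rows


calls = []


def fetch_from_api(category):
    """Hypothetical API call that records each request it receives."""
    calls.append(category)
    return [{"name": "Desk lamp", "category": category}]


load_category("lighting", fetch_from_api)
load_category("lighting", fetch_from_api)
print(len(calls))  # the second load came from the cache
```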

Debouncing user actions

Debouncing involves delaying the execution of an action (such as a search or filter) until a user has finished interacting with the input. This prevents the system from triggering multiple requests for each keystroke or action.

How it helps:

- Reduces the number of API calls, preventing excessive load on the server.

- Improves the user experience by reducing lag when applying filters, sorting, or searching in a large table.

Example:

When a user types in a search field to filter table data, debouncing ensures that the search query only triggers once the user stops typing, rather than with every keystroke.

Best practices:

- Implement debouncing with a delay of around 300-500ms to strike the right balance between responsiveness and performance.

- Use JavaScript libraries that provide built-in debouncing methods for smoother implementation.
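
To show the mechanism, here is a minimal debouncer sketched in Python with `threading.Timer`. In a browser you would do the same thing with `setTimeout` or a library helper. The `Debouncer` class is an illustrative assumption, and the delay is shortened to 50 ms so the demo finishes quickly.

```python
import threading
import time


class Debouncer:
    """Run fn only after `wait` seconds have passed with no new call."""

    def __init__(self, fn, wait):
        self.fn = fn
        self.wait = wait
        self._timer = None
        self._lock = threading.Lock()

    def __call__(self, *args):
        with self._lock:
            if self._timer is not None:
                self._timer.cancel()  # drop the pending call
            self._timer = threading.Timer(self.wait, self.fn, args)
            self._timer.start()


searches = []  # records the queries that were actually sent
debounced_search = Debouncer(searches.append, wait=0.05)

for text in ("l", "la", "lap", "laptop"):
    debounced_search(text)  # simulated rapid keystrokes

time.sleep(0.3)  # give the final timer time to fire
print(searches)  # ['laptop']
```

Four keystrokes result in a single search for the final text, which is the saving debouncing is meant to deliver.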

Efficient table libraries

Utilizing specialized JavaScript libraries optimized for rendering large tables can significantly boost performance. These libraries come with built-in features like virtual scrolling, lazy loading, and optimized DOM manipulation.

How it helps:

- These libraries are designed specifically to handle large datasets without compromising on speed or usability.

- Many of them also offer additional features like sorting, filtering, and pagination, which are optimized for performance.

Example:

wpDataTables, for WordPress users, allows you to easily manage large datasets while ensuring fast loading times through its built-in optimization features. Libraries like React Table or ag-Grid offer similar performance optimizations for large tables in non-WordPress environments.

Best practices:

- Choose a table library that supports performance-enhancing features like virtualization and dynamic loading.

- Ensure that the library is compatible with your framework (React, Vue, Angular, etc.) and optimized for large datasets.

Advanced Techniques to Ensure Large Data Tables Load Quickly

For sites dealing with massive datasets or requiring near-instant performance, basic optimizations might not be enough. These advanced techniques can give you even more control over loading speed and performance.

| Technique | What It Is | How It Helps | Example |
|---|---|---|---|
| 1. Load Data Asynchronously via AJAX | AJAX enables background data fetching without refreshing the entire page, loading only necessary data as requested. | Reduces the initial load time by only fetching the data that is needed. Keeps the page responsive during data fetching. | An e-commerce product table that loads product details as the user scrolls, ensuring a smooth browsing experience. |
| 2. Virtual Scrolling for Extensive Datasets | Virtual scrolling renders only the visible portion of the table, and additional rows are loaded dynamically as the user scrolls. | Reduces memory and CPU usage by not rendering the entire dataset at once. Provides a seamless user experience even with massive datasets. | A financial dashboard displaying stock data where only the visible rows are loaded, and more are added as the user scrolls down. |
| 3. Utilize Content Delivery Networks (CDNs) | A CDN distributes content across multiple servers worldwide to deliver data from the server closest to the user. | Reduces latency by serving data from the nearest server. Speeds up load times for users in different regions. | A product catalog with static data, served via a CDN to provide faster load times globally. |

These advanced techniques allow for optimal table performance, especially when dealing with extensive datasets or a large number of concurrent users. By leveraging AJAX, virtual scrolling, and CDNs, you can ensure fast, scalable, and efficient table loading, regardless of your table size.

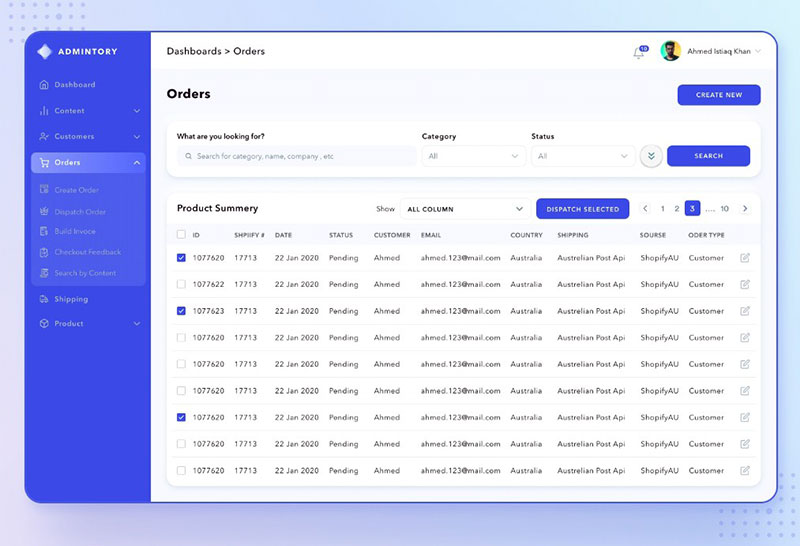

Use Specialized Plugins and Tools

When it comes to managing and optimizing large data tables in WordPress, specialized plugins and tools can significantly improve both performance and user experience. These tools offer built-in optimizations that can help you implement the strategies we’ve discussed so far, without having to manually code or handle complex configurations.

For WordPress users, wpDataTables is an excellent solution for quickly loading and managing large tables. Let’s explore how using the right table plugin can streamline your optimization efforts and improve table performance.

wpDataTables is a plugin designed for creating interactive tables, charts, and graphs in WordPress. It allows users to manage and display large datasets without compromising on speed and functionality.

How it helps:

- Automatic optimization: wpDataTables comes with built-in optimization techniques that help improve loading times, such as pagination, server-side processing, and caching.

- Easy integration: The plugin integrates seamlessly with WordPress, providing you with an easy-to-use interface to manage large datasets without needing to write code.

- High performance: It’s designed to handle large tables with thousands of rows, while ensuring that they load quickly and efficiently, even with heavy user interaction.

Example:

For a WordPress site running a large inventory table, wpDataTables allows you to create a responsive table that can display thousands of products while keeping the load time under control through features like caching and AJAX loading.

Benefits of plugins with built-in optimization features for large tables

Many WordPress plugins, like wpDataTables, include features specifically built to optimize large data tables. These features address issues like slow page loading, inefficient queries, and excessive server resource usage.

How it helps:

- Data caching: The plugin can cache large datasets so that the same data doesn’t need to be fetched from the database every time a user interacts with the table.

- AJAX loading: For tables that may contain a lot of data, AJAX ensures that only the relevant rows are loaded based on the user’s actions, instead of loading the entire dataset at once.

- Server-side processing: wpDataTables, for example, offers server-side processing, which means that sorting, filtering, and pagination actions are processed on the server, reducing the load on the client-side browser and speeding up user interactions.

Example:

If your table is frequently filtered or sorted, the plugin can process these actions on the server, rather than loading the entire dataset every time the user makes a change. This dramatically reduces loading times and makes the table more responsive.

In wpDataTables, you can configure the plugin to load only 100 rows at a time and then dynamically load more when the user scrolls. You can also use server-side processing for filtering and sorting, ensuring that these actions happen quickly, even with millions of rows.

| Optimization Feature | What It Is | How It Helps | Example | Best Practices |
|---|---|---|---|---|
| Data Caching | Caches frequently accessed data to reduce the need for repetitive database queries. | Speeds up load times by serving cached data. Reduces server load and improves scalability. | Using wpDataTables to cache product data, so users can view it instantly without waiting for a reload. | Enable caching for static or frequently used data. Ensure caching is regularly updated to reflect changes. |
| AJAX Loading | Loads only relevant data when needed, without reloading the entire table. | Reduces load times by fetching only the necessary data. Keeps the table responsive. | Filtering a customer list table via AJAX so that only relevant records load without page refresh. | Implement AJAX for large tables with complex interactions. Combine AJAX with pagination for optimal results. |
| Server-Side Processing | Handles sorting, filtering, and pagination actions on the server rather than the client. | Reduces browser load by processing large data on the server. Speeds up table interaction. | Implementing server-side processing in wpDataTables for a financial data table with thousands of rows. | Use server-side processing for large datasets. Limit data fetched based on user interaction to speed up actions. |

By integrating plugins like wpDataTables into your WordPress site, you can take advantage of their built-in features that optimize performance, including data caching, AJAX loading, and server-side processing. These tools simplify the process of managing large data tables while ensuring that they load quickly and perform well, no matter the dataset size.

Conclusion

As we’ve seen throughout this blog, optimizing large data tables is essential for maintaining fast and responsive performance. From understanding the causes of slow loading times to employing advanced techniques like AJAX, server-side processing, and virtual scrolling, there are many ways to tackle this issue.

By following the techniques and best practices outlined in this post, you can ensure that your tables remain fast, even with massive datasets. Plugins like wpDataTables make these optimizations accessible and easy to implement for WordPress users, helping you avoid performance issues that could drive users away.

Take action now to implement these strategies, and don’t wait for slow load times to cost you users and opportunities. A fast, responsive table is just a few steps away.